Implementing OAuth 2.0 access tokens

Developers of OAuth 2.0 servers eventually face the question of how to implement access tokens. The spec doesn’t mandate any particular implementation, and for good reason: the client treats them as opaque strings that are passed with each request to the protected resource / web API. It is up to the server to decide how they are created and what information gets encoded into them. The OAuth 2.0 RFC does, however, mention two possible strategies:

- The access token is an identifier that is hard to guess, e.g. a randomly generated string of sufficient length, that the server handling the protected resource can use to look up the associated authorisation information, e.g. by means of a network call to the OAuth 2.0 server that issued the authorisation.

- The access token contains the authorisation information itself, in a manner that can be verified, e.g. by encoding the authorisation information along with a signature into the token. The token may optionally also be encrypted to keep the encoded authorisation information secret from the client.

What are the pros and cons of each strategy?

Identifiers as access tokens, authorisation resolved by lookup

- Pros:

- Short access token strings. A 16 byte (128 bit) value with sufficient randomness to prevent practical guessing should suffice for most cases.

- The authorisation information that is associated with the access token can be of arbitrary size and complexity.

- The access token can be revoked with almost immediate effect.

- Cons:

- A network request to the OAuth 2.0 server is required to retrieve the authorisation information for each access token. The need for subsequent lookups, up to the access token expiration time, may be mitigated by caching the access token / authorisation pairs. Caching, however, comes at the expense of a longer worst-case delay before a token revocation takes effect.
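The identifier strategy can be sketched in a few lines. The following is a minimal in-memory illustration only, assuming a hypothetical `OpaqueTokenStore` class (it is not the Connect2id implementation); the authorisation is kept as a plain JSON string and token expiration is left out for brevity.

```java
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical in-memory store for identifier-based access tokens. */
final class OpaqueTokenStore {

    private static final SecureRandom RANDOM = new SecureRandom();

    // Token identifier -> authorisation (kept as a JSON string for brevity)
    private final Map<String, String> authorisations = new ConcurrentHashMap<>();

    /** Issues a token with 128 bits of randomness, encoded as 22 URL-safe characters. */
    String issue(String authorisationJson) {
        byte[] id = new byte[16];
        RANDOM.nextBytes(id);
        String token = Base64.getUrlEncoder().withoutPadding().encodeToString(id);
        authorisations.put(token, authorisationJson);
        return token;
    }

    /** Resolves a token to its authorisation; empty if unknown or revoked. */
    Optional<String> lookup(String token) {
        return Optional.ofNullable(authorisations.get(token));
    }

    /** Revokes a token; the very next lookup will fail. */
    void revoke(String token) {
        authorisations.remove(token);
    }
}
```

Because the authorisation lives only on the server side, revocation is a single map removal. A resource server that caches lookup results would keep honouring the token until its cache entry expires.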

Self-contained access tokens, encoded authorisation protected by signature and optional encryption

- Pros:

- No need for a network call to retrieve the authorisation information as it’s self-contained, so access token processing may be significantly quicker and more efficient.

- Cons:

- Significantly longer access token strings. The token has to encode the authorisation information as well as the accompanying signature. Encrypted tokens become longer still.

- To enable tight revocation control, the access tokens should have a short expiration time, which may result in more refresh token requests at the OAuth 2.0 server.

- The server handling the protected resource must have the necessary tools and infrastructure to validate signatures and perform decryption (if the access tokens are encrypted) and to manage the required keys (shared or public / private, possibly certificates) for that.
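To make the self-contained strategy concrete, here is a minimal sketch of signing and verifying a compact token with RSA and SHA-256, using only the JDK's `java.security` classes rather than a JOSE library. The class and method names are hypothetical, the header is fixed to `{"alg":"RS256"}`, and the payload claims (such as the expiration time) are not checked here; a real resource server would have to validate those too.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;
import java.security.SignatureException;
import java.util.Base64;

/** Hypothetical helper: header.payload.signature tokens signed with RS256. */
final class SelfContainedToken {

    private static final Base64.Encoder B64 = Base64.getUrlEncoder().withoutPadding();

    // Fixed header for RSASSA-PKCS1-v1_5 with SHA-256
    private static final String HEADER =
            B64.encodeToString("{\"alg\":\"RS256\"}".getBytes(StandardCharsets.UTF_8));

    static KeyPair generateKeyPair() {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(2048);
            return generator.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Encodes the authorisation JSON and appends an RSA signature over header and payload. */
    static String sign(String payloadJson, PrivateKey key) {
        try {
            String signingInput = HEADER + "."
                    + B64.encodeToString(payloadJson.getBytes(StandardCharsets.UTF_8));
            Signature signer = Signature.getInstance("SHA256withRSA");
            signer.initSign(key);
            signer.update(signingInput.getBytes(StandardCharsets.US_ASCII));
            return signingInput + "." + B64.encodeToString(signer.sign());
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Checks the signature locally with the public key; no network call is involved. */
    static boolean verify(String token, PublicKey key) {
        String[] parts = token.split("\\.");
        if (parts.length != 3 || !parts[0].equals(HEADER)) {
            return false;
        }
        try {
            byte[] signature = Base64.getUrlDecoder().decode(parts[2]);
            Signature verifier = Signature.getInstance("SHA256withRSA");
            verifier.initVerify(key);
            verifier.update((parts[0] + "." + parts[1]).getBytes(StandardCharsets.US_ASCII));
            return verifier.verify(signature);
        } catch (SignatureException | IllegalArgumentException e) {
            return false; // malformed signature segment
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Only the OAuth 2.0 server needs the private key. The resource server holds just the public key, which is the key management burden mentioned in the last point above.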

How do the two approaches compare in practice?

Network lookups practical only when the OAuth 2.0 server is on the same host or network segment

We first ran a number of tests against our Connect2id server, which keeps track of the issued access and refresh tokens and their matching authorisations. Each authorisation is checked by passing the access token to the RESTful web API of the server, which, if the token is valid, returns the matching authorisation as a JSON object:

    {
      "iss"   : "https://c2id.com/op",
      "iat"   : 1370598200,
      "exp"   : 1370600000,
      "sub"   : "alice@wonderland.net",
      "scope" : "openid profile email webapp:post webapp:browse",
      "aud"   : ["http://webapp.com/rest/v1", "http://webapp.com/rest/v2"]
    }
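Once the resource server has this object, it still has to decide whether the request is allowed. A minimal sketch of such a check, assuming a hypothetical `Authorisation` class that has already been populated from the JSON (parsing is omitted, since the JDK has no built-in JSON parser):

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical holder for the fields of the authorisation object that matter for access control. */
final class Authorisation {

    final long exp;           // expiration time, seconds since the Unix epoch
    final String scope;       // space-delimited scope values
    final List<String> aud;   // resource servers the token is intended for

    Authorisation(long exp, String scope, List<String> aud) {
        this.exp = exp;
        this.scope = scope;
        this.aud = aud;
    }

    /** True if the token is unexpired, addressed to this resource and carries the required scope value. */
    boolean permits(String resource, String requiredScope, long nowSeconds) {
        return nowSeconds < exp
                && aud.contains(resource)
                && Arrays.asList(scope.split(" ")).contains(requiredScope);
    }
}
```

With the values from the example above, a `webapp:post` request to `http://webapp.com/rest/v1` is permitted until the `exp` time of 1370600000 and rejected afterwards.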

When the application server handling the protected resource and the OAuth 2.0 server are situated on the same LAN segment, the RESTful request to check the access token takes about 5 to 10 milliseconds.

When the RESTful request has to go out across the internet (with the OAuth 2.0 server installed in the AWS cloud), the time to retrieve the authorisation information increases to about 80 to 100 milliseconds.

This simple test shows clearly that using a lookup to check the token authorisation is only practical when the token consumer (the application) and the OAuth 2.0 server reside on the same host or LAN, or perhaps within the same data centre. Otherwise the response time can become so large as to render the entire application unusable.

Signed self-contained access tokens enable sub-millisecond verification, but longer keys can significantly affect processing

We implemented self-contained access tokens by applying a JSON Web Signature (JWS) with an RSA algorithm to the JSON object that represents the authorisation. For that we utilised the open source Nimbus JOSE + JWT library.

The resulting token (from the above example) is approximately 500 characters in size (with line breaks for clarity):

    eyJhbGciOiJSUzI1NiJ9.eyJleHAiOjEzNzA2MDU0NDgsInN1YiI
    6ImFsaWNlQHdvbmRlcmxhbmQubmV0Iiwic2NvcGUiOiJvcGVuaWQ
    gcHJvZmlsZSBlbWFpbCB3ZWJhcHA6cG9zdCB3ZWJhcHA6YnJvd3N
    lIiwiYXVkIjpbImh0dHA6XC9cL3dlYmFwcC5jb21cL3Jlc3RcL3Y
    xIiwiaHR0cDpcL1wvd2ViYXBwLmNvbVwvcmVzdFwvdjIiXSwiaXN
    zIjoiaHR0cHM6XC9cL2MyaWQuY29tXC9vcCIsImlhdCI6MTM3MDY
    wMzY0OH0.dcLjGbBiijHSF0YGLHY0GGdXqQOlAbiBli4es7dgoOc
    9jqKKUkqG2d9lztku82dLDq-xWvU2RDuhDtd-luSyLEQrrMGdAnW
    zQwWTPw_RVKDzK8NdRuUbx7pwj8cayhFBsgJujmdxN_qOyvjEdIE
    mfdEnprESkwNZo87OO_RMxeY
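The token consists of three base64url-encoded segments separated by dots: header, payload and signature. The first two can be decoded by anyone holding the token, which is why encryption is needed if the authorisation must stay hidden from the client. A small sketch, using a hypothetical `JwsInspector` helper built on the JDK's `Base64` class:

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper for peeking into a compact JWS without verifying it. */
final class JwsInspector {

    /** Decodes one base64url segment (0 = header, 1 = payload) into text. */
    static String decodeSegment(String compactJws, int index) {
        String[] parts = compactJws.split("\\.");
        return new String(Base64.getUrlDecoder().decode(parts[index]), StandardCharsets.UTF_8);
    }
}
```

For the token above (with the line breaks removed), `JwsInspector.decodeSegment(token, 0)` returns `{"alg":"RS256"}`. Decoding says nothing about authenticity; the content must not be trusted until the signature has been verified.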

For the RSA signatures we generated 1024 bit keys, which is the minimum RSA recommendation for corporate applications. We also did a round of tests with 2048 bit keys, which is the minimum key size required by the JOSE specification.

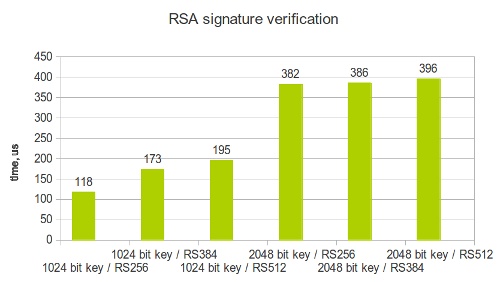

We created a benchmark to measure the average time to validate an RSA signature after the access token has been parsed to a JWS object. The code was run on an AWS instance, where we recorded the following results per JWS algorithm:

With 1024 bit RSA keys:

- RS256: 118 microseconds

- RS384: 173 microseconds

- RS512: 195 microseconds

With 2048 bit RSA keys:

- RS256: 382 microseconds

- RS384: 386 microseconds

- RS512: 396 microseconds

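It becomes apparent that doubling the key size from 1024 to 2048 bits slows down signature verification by a factor of roughly two to three (382 / 118 ≈ 3.2 for RS256, 396 / 195 ≈ 2.0 for RS512). With 2048 bit keys the choice of hash function is almost irrelevant to the overall computation: RS256, RS384 and RS512 all verify within 14 microseconds of each other.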
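A measurement of this kind can be approximated with the JDK alone. The sketch below is not the benchmark from our repository; it is a simplified stand-in with hypothetical names that times raw RSA signature verification through `java.security.Signature`, where `SHA256withRSA`, `SHA384withRSA` and `SHA512withRSA` are the JCA names corresponding to RS256, RS384 and RS512. It has no JIT warm-up phase, so absolute numbers will vary with the hardware, the JVM and the iteration count.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.Signature;

/** Simplified timing sketch for RSA signature verification. */
final class RsaVerifyBenchmark {

    /** Returns the average time in microseconds to verify one signature. */
    static double averageVerifyMicros(int keyBits, String jcaAlgorithm, int iterations) {
        try {
            KeyPairGenerator generator = KeyPairGenerator.getInstance("RSA");
            generator.initialize(keyBits);
            KeyPair pair = generator.generateKeyPair();
            byte[] message = "header.payload".getBytes(StandardCharsets.US_ASCII);

            // Sign once; only verification is timed
            Signature signer = Signature.getInstance(jcaAlgorithm);
            signer.initSign(pair.getPrivate());
            signer.update(message);
            byte[] signature = signer.sign();

            Signature verifier = Signature.getInstance(jcaAlgorithm);
            long start = System.nanoTime();
            for (int i = 0; i < iterations; i++) {
                verifier.initVerify(pair.getPublic());
                verifier.update(message);
                if (!verifier.verify(signature)) {
                    throw new IllegalStateException("verification failed");
                }
            }
            return (System.nanoTime() - start) / 1000.0 / iterations;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        for (int bits : new int[] {1024, 2048}) {
            for (String alg : new String[] {"SHA256withRSA", "SHA384withRSA", "SHA512withRSA"}) {
                System.out.printf("%d bit, %s: %.0f microseconds%n",
                        bits, alg, averageVerifyMicros(bits, alg, 10_000));
            }
        }
    }
}
```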

Comparing token verification performance

The self-contained, RSA signed access tokens emerge as the clear winner from this benchmark, by a factor of at least ten. There is potential for further performance optimisation by caching the access tokens that have already been verified. The payload size and the encoding method, however, have to be carefully managed, because of the general URL length restriction of about 2000 characters (which affects access tokens in the implicit OAuth 2.0 flow, where they are passed as a URL component).
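The caching idea could look roughly like this. The sketch assumes a hypothetical `VerifiedTokenCache` class wrapping a caller-supplied verifier function, which returns the token's expiration time if the signature is valid and an empty value otherwise. The map is unbounded here; a production cache would need a size limit and eviction.

```java
import java.util.Map;
import java.util.OptionalLong;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

/** Hypothetical cache that skips signature verification for tokens already seen. */
final class VerifiedTokenCache {

    // Token string -> expiration time (seconds since the Unix epoch)
    private final Map<String, Long> expiryByToken = new ConcurrentHashMap<>();

    // Returns the "exp" value if the signature is valid, empty otherwise
    private final Function<String, OptionalLong> verifier;

    VerifiedTokenCache(Function<String, OptionalLong> verifier) {
        this.verifier = verifier;
    }

    /** True if the token has a valid signature and has not expired at nowSeconds. */
    boolean isValid(String token, long nowSeconds) {
        Long exp = expiryByToken.get(token);
        if (exp == null) {
            // First sighting: pay for the signature check once
            OptionalLong verified = verifier.apply(token);
            if (!verified.isPresent()) {
                return false;
            }
            exp = verified.getAsLong();
            expiryByToken.put(token, exp);
        }
        if (nowSeconds >= exp) {
            expiryByToken.remove(token);
            return false;
        }
        return true;
    }
}
```

Since a signed token cannot change after issue, a cache hit is as trustworthy as a fresh verification; only the expiration time has to be rechecked on every request.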

Having said that, access tokens that are resolved by a call to the OAuth 2.0 authorisation server still have their place, in cases when the protected resource resides on the same host or LAN segment (e.g. the UserInfo endpoint in OpenID Connect).

Hybrid access tokens

The initial development version of the Connect2id server issued tokens that consumers could verify by means of a RESTful web call. To support applications further afield we decided to add support for self-contained tokens, using RSA signatures and optional encryption to verify and protect the encoded authorisation information. Applications that have received a self-contained (signed) access token can still verify it by means of a call to the OAuth 2.0 server, passing its content as an opaque string. We call these hybrid access tokens.

JWS benchmark Git repo

The JWS benchmark cited in this article is available as an open source project at https://bitbucket.org/connect2id/nimbus-jose-jwt-benchmarks

Feel free to extend it with additional signature algorithms if you wish to explore other means, such as Elliptic Curve signatures, to create verifiable tokens. Comments and feedback are welcome as always.